Polling FAQ

Questions

- Who conducts polls?

- How are polls conducted?

- How does the pollster decide who to call?

- Do pollsters call cell phones?

- Do pollsters call VoIP phones?

- Do pollsters miss any other large groups besides cell users?

- Can polls be automated?

- So polls done by human beings are more reliable?

- Can polls be conducted over the Internet?

- Does it matter when the poll is conducted?

- Can they tell who will actually vote?

- What does 'margin of error' mean?

- What are methodological errors?

- Do pollsters present the actual numbers observed?

- Which polls do you use?

- What algorithm (formula) is used to compute the map?

- Should I believe poll results?

Who Conducts Polls?

Polling companies. These are specialized companies (or parts of companies) whose business is conducting public opinion polls. There are a few polling companies that operate nationally and many local ones. A number of universities also have a polling institute, since they have an abundance of the key ingredient: cheap labor. The websites of some of the major polling organizations are listed below. Note that most of them do not give much useful data for free. To get the numbers, you have to buy a subscription, in which case a wealth of data is provided.

- American Research Group

- The Gallup Poll

- Mason-Dixon

- Quinnipiac University Polling Institute

- Rasmussen Reports

- SurveyUSA

- Zogby

How are polls conducted?

Typically, the customer contacts the polling organization and places an order for a poll, specifying what questions are to be asked, when the poll will be conducted, how many people will be interviewed, and how the results will be presented. The pollster then calls the agreed-upon number of people and asks them the questions. Usually, the interview begins with easy questions to assure the respondent that he or she is not going to be embarrassed. For example: "I am going to read you the names of some well-known political figures. For each one, please tell me if you have a favorable, neutral, or unfavorable opinion of that person." Then the names of well-known figures such as George Bush, Hillary Clinton, John McCain, etc. are read. The answers are typed into a computer as they are given. When each interviewer has the requisite number of valid interviews, he or she transmits the results to the organization. When the poll is completed, the data are normalized if need be (e.g., to make sure men and women are equally represented), and the pollster prepares a final report, often breaking down the results by age, gender, income, education, political party, and other categories. The more people called, the more accurate the poll, but the more expensive it is. For state polls, 500-1000 valid interviews are typical (but it may take many times that number of calls to get 500-1000 valid interviews). A state poll can easily cost in the neighborhood of $15,000.

How does the pollster decide who to call?

All polls are based on the idea of a random sample. Two methods are used to get the sample. The first is called RDD (Random Digit Dialing), in which the pollster carefully chooses an area code and prefix (together, the first six digits of a telephone number) and then picks the next four digits at random. Because of how the telephone system is organized, people with the same first six digits generally live close together, although this property is changing. This method generates a good random sample since it hits all numbers in the selected area with equal probability, including unlisted numbers. Unfortunately, it also hits business phones, fax machines, and modems. The second method takes telephone numbers from a telephone book or list and either uses them as they are or randomizes the final digit in order to hit unlisted numbers.

Do pollsters call cell phones?

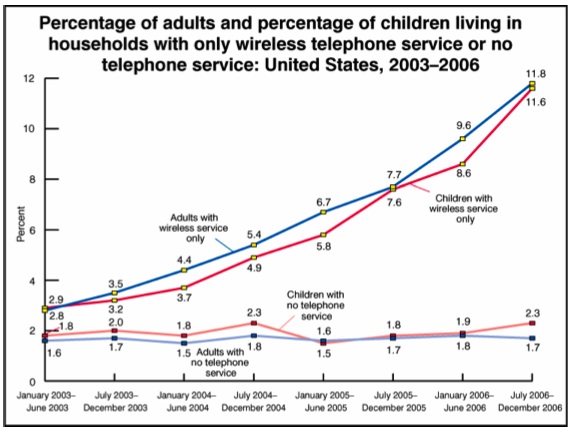

No. It is illegal for them to do so. This fact means that people who have only a cell phone and no land line will be systematically excluded from polls. Since these people tend to be mostly young people, the pollsters intentionally overweight the 18-30 year olds to compensate for this effect, but as more people drop their landlines, it is becoming a serious issue. Here is a report on the issue and below is a graph taken from the report.
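The overweighting mentioned above can be sketched in a few lines of Python. This is only an illustration of the general idea (often called post-stratification), not any particular pollster's procedure. The function names, the two age groups, and all the numbers are hypothetical.

```python
def group_weights(sample_counts, population_shares):
    """Weight each group so the weighted sample matches the population shares."""
    total = sum(sample_counts.values())
    return {
        group: population_shares[group] / (count / total)
        for group, count in sample_counts.items()
    }


def weighted_share(supporters, sample_counts, weights):
    """Fraction supporting a candidate after each group is reweighted."""
    weighted_yes = sum(supporters[g] * weights[g] for g in sample_counts)
    weighted_all = sum(sample_counts[g] * weights[g] for g in sample_counts)
    return weighted_yes / weighted_all


# Hypothetical poll: young people are 20% of voters but only 10% of the sample.
sample_counts = {"18-30": 100, "31+": 900}
population_shares = {"18-30": 0.20, "31+": 0.80}
supporters = {"18-30": 60, "31+": 405}  # respondents favoring candidate X

weights = group_weights(sample_counts, population_shares)
raw = sum(supporters.values()) / sum(sample_counts.values())
adjusted = weighted_share(supporters, sample_counts, weights)
print(f"raw: {raw:.3f}, weighted: {adjusted:.3f}")
```

In this made-up case each young respondent counts about twice and each older respondent slightly less than once, which moves the candidate's share from 46.5% to about 48%. The correction is only as good as the assumption that the young people reached by land line resemble those who were missed.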

Do pollsters call VoIP phones?

Increasingly many people have phone service over cable or on Skype. There is no law against pollsters calling these phones, but it is a bit tricky because customers can often choose any area code they want. Someone living in Seattle can choose a Florida area code so his mother can call him as a local call. When a pollster calls someone with a Florida area code and gets someone in Washington, the accuracy of the poll is not improved.

Do pollsters miss any other large groups besides cell users?

Yes. They never, ever poll the 7 million Americans who live overseas (roughly the population of Virginia) even though all of them over 18 are eligible to vote in the state they previously lived in. These people are soldiers, teachers, missionaries, students, businessmen, and spouses of foreign nationals, to name just a few categories. Since they are such a heterogeneous group, they are hard to correct for.

Can polls be automated?

A recent development is the use of automated polls. With this technology, the company's computer dials telephone numbers at random and then plays a message recorded by a professional radio announcer. The message asks whoever answers demographic and political questions, to which they respond by pressing buttons on the telephone. The percentage of people who hang up quickly when this technique is used is much higher than when a human being conducts the poll. Nevertheless, SurveyUSA and Rasmussen rely heavily on this technique because it is fast and cheap, allowing them to charge less than their competitors in the polling business. Traditional polling companies criticize the methodology on the grounds that it does not adequately filter out teenagers too young to vote but definitely old enough to play games with the system. Chuck Todd, editor of the Hotline, a daily political tipsheet, was once called by SurveyUSA and was effortlessly able to pass himself off as a 19-year-old Republican Latina, something he could never have done with a human pollster.

So polls done by human beings are more reliable?

Not so fast. They have problems, too. Nearly all pollsters outsource the actual calling to third parties who run call centers or use freelancers. These actual callers are part-timers who are poorly trained and hardly motivated. They may have strong accents, mispronounce words, chew gum, read too fast or too slow, or get tired. In some cases, they have been known to make up all the answers without calling anyone at all, a practice known as "curbstoning," something no pollster wants to talk about, except the robopollsters, whose computers have never been caught curbstoning.

Can a poll be conducted over the Internet?

Some companies, especially Zogby, have begun pioneering Internet polls, usually by asking people to sign up. Obviously the sample is anything but random. Also, people can lie, but they can lie on telephone polls, too. Careful normalization can remove the sampling bias. For example, if 80% of the respondents in an online poll are men and the customer wants an equal number of men and women, the pollster can simply treat each woman's opinion as if four women said the same thing. Also, the online pollsters often poll the same people over and over, which has its advantages. For example, if a person has expressed strong approval for, say, George Bush five months in a row and now expresses strong disapproval, that represents a genuine shift. And, as with the robopollsters, every person gets precisely the same questions presented in precisely the same form, without any possible bias introduced by the interviewer.

Does it matter when the poll is conducted?

Yes. Calls made Monday through Friday have a larger probability of getting a woman than a man, because there are more housewives than househusbands. Since women are generally more favorable to the Democrats than men are, this effect can introduce bias. Also, calls made Friday evening may miss younger voters, who may be out partying, and thus underweight them in the results. To counteract this effect, some polling companies call for an entire week, instead of the usual three days, but this approach results in polls that do not respond as quickly to events in the news. Others try to normalize their results to include enough men, enough young voters, etc.

Can they tell who will actually vote?

Well, they often try. Many polls include questions designed to determine if the person is likely to vote. These may include:

- Are you currently registered to vote?

- Did you vote in 2004?

- Did you vote in 2002?

- Do you believe that it is every citizen's duty to vote?

- Do you think your vote matters?

What Does Margin of Error Mean?

There is no concept as confusing as 'Margin of Error.' It is used a lot but few people understand it. Suppose a polling company calls 1000 randomly selected people in a state that is truly divided 50-50 (say Missouri). They may, simply by accident, happen to call 520 Democrats and 480 Republicans and announce that Claire McCaskill is ahead 52% to 48%. But another company on the same day may happen to get 510 Republicans and 490 Democrats and announce that Jim Talent is ahead 51% to 49%. The variation caused by having such a small sample is called the margin of error and is usually between 2% and 4% for the sample sizes used in state polling. With a margin of error of, say, 3%, a reported 51% really means that there is a 95% chance that the true (unknown) percentage of voters favoring McCaskill falls between 48% and 54% [and a 5% chance that it is outside this (two sigma) range].

In the first example above, with a 3% MoE, the 95% confidence interval for McCaskill is 49% to 55% and for Talent 45% to 51%. Since these overlap, we cannot be 95% certain that McCaskill is really ahead, so this is called a statistical tie. When the ranges of the candidates do not overlap (i.e., the difference between them is at least twice the margin of error), then we can be 95% certain the leader is really ahead.

The margin of error deals ONLY with sampling error: the fact that when you ask 1000 people in an evenly divided state, you are probably not going to get exactly 500 Democrats and 500 Republicans. However, a far greater source of error in all polls is methodological error.
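The sampling arithmetic above can be checked with a short Python sketch. It assumes the textbook formula for a simple random sample, z * sqrt(p(1-p)/n) with z = 1.96 for 95% confidence. The FAQ does not state this formula, and real pollsters may adjust it for their weighting. The function names are illustrative.

```python
import math

Z_95 = 1.96  # standard normal multiplier for a 95% confidence level


def margin_of_error(n, p=0.5, z=Z_95):
    """Sampling margin of error for a share p observed in a random sample of n."""
    return z * math.sqrt(p * (1 - p) / n)


def is_statistical_tie(share_a, share_b, moe):
    """True when the lead is smaller than twice the margin of error."""
    return abs(share_a - share_b) < 2 * moe


for n in (500, 1000, 2000):
    print(f"n={n}: margin of error is about {margin_of_error(n):.1%}")

# The McCaskill/Talent example from the text: 52% to 48% with a 3% MoE.
print(is_statistical_tie(0.52, 0.48, 0.03))
```

Because the margin shrinks only with the square root of the sample size, doubling the number of interviews from 1000 to 2000 reduces it by less than a third, which is one reason pollsters rarely pay for much larger samples.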

What are methodological errors?

First, there are many ways a poll can give misleading results due to poor methodology. For example, the phrasing of the questions is known to influence the results. Consider the following options:

- If the Senate election were held today, would you vote for the Democrat or the Republican?

- If the Senate election were held today, would you vote for the Republican or the Democrat?

- If the Senate election were held today, would you vote for Sherrod Brown or Mike DeWine?

- If the Senate election were held today, would you vote for Mike DeWine or Sherrod Brown?

- If the Senate election were held today, would you vote for Democrat Sherrod Brown or Republican Mike DeWine?

- If the Senate election were held today, would you vote for Republican Mike DeWine or Democrat Sherrod Brown?

- If the Senate election were held today, for whom would you vote?

Second, the sample may not be random. The most famous blunder in polling history occurred during the 1936 presidential election, in which Literary Digest magazine took a telephone poll and concluded that Alf Landon was going to beat Franklin D. Roosevelt in a landslide. Didn't happen. What went wrong? At the height of the Depression, only rich people had telephones, and they were overwhelmingly Republican. But there weren't very many of them. Even now, telephone polls miss people too poor to have telephones, people who don't speak English (well), and people scared of strangers or too busy to deal with pollsters. These effects could systematically bias the results if not corrected for.

Do pollsters present the actual numbers observed?

Virtually never. All pollsters have a demographic model of the population and want their sample to conform to it. With only 500 people in a poll, there might not be enough single young black Republican women or too many married older white Democratic men. Statistical techniques are used to correct for these deficiencies. Some parameters, such as the fraction of voters in a state who are women, are well known and not controversial. However, normalizing for political affiliation is very controversial. If a pollster decides that, say, 60% of the voters in some state are Republicans and 40% are Democrats, then even if a poll says the Democrat and Republican are tied at 500 votes each in the sample, he will count the 500 Republicans sampled as 600 and the 500 Democrats sampled as 400 and conclude the Republican is ahead, contradicting the raw data. Some pollsters determine the party affiliations based on the exit polls of the previous election, and some use long-running means of their own polling data.

Which polls do you use?

First of all, only neutral pollsters are used. Pollsters whose primary business is helping Democrats or Republicans get elected are not used. They tend to make their horse look better. When there are multiple polls for a race, the most recent poll is always used. The middle date of the polling period is what counts (not the release date). If other polls have middle polling dates within a week of the most recent one, they are all averaged together. This tends to smooth out variations. In 2004, this algorithm did the best. Click on Polling data in Excel to the right of the map for all the raw polling data. It is sorted in descending order by polling date (Jan. 1 is 1.0). The fields are separated by commas so you can read the file into Excel.

What algorithm (formula) is used to compute the map?
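As a rough illustration of the averaging rule (take the most recent poll by middle date and average in any other polls whose middle date falls within a week of it), here is a minimal Python sketch. The `Poll` class, the `combine` function, the sample numbers, and the reading of "within a week" as "at most 7 days" are all assumptions made for illustration. This is not the site's actual code, and it presumes partisan pollsters have already been filtered out.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Poll:
    pollster: str
    start: date   # first day of interviewing
    end: date     # last day of interviewing
    dem: float    # Democrat's percentage
    rep: float    # Republican's percentage

    @property
    def middle(self) -> date:
        # Middle of the polling period; partial days are dropped.
        return self.start + (self.end - self.start) / 2


def combine(polls, window_days=7):
    """Average the latest poll with others whose middle date is within the window."""
    if not polls:
        raise ValueError("no polls for this race")
    latest = max(p.middle for p in polls)
    window = timedelta(days=window_days)
    recent = [p for p in polls if latest - p.middle <= window]
    dem = sum(p.dem for p in recent) / len(recent)
    rep = sum(p.rep for p in recent) / len(recent)
    return dem, rep


# Hypothetical polls for one race.
polls = [
    Poll("Pollster A", date(2006, 10, 20), date(2006, 10, 22), 48.0, 46.0),
    Poll("Pollster B", date(2006, 10, 16), date(2006, 10, 18), 44.0, 48.0),
    Poll("Pollster C", date(2006, 10, 1), date(2006, 10, 3), 40.0, 50.0),
]
result = combine(polls)
print(result)
```

In this made-up example the third poll is too old to count, so the result is the plain average of the first two. Taken alone, the most recent poll shows the Democrat ahead, while the average shows a narrow Republican lead, which is the kind of smoothing the rule is meant to provide.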

In 2004, we ran three different algorithms. The main page just used the most recent poll. The second algorithm averaged three days' worth of polls using only the nonpartisan pollsters. The third one included a mathematical formula for predicting how undecided voters would break based on historical data. The second one was most stable and gave the best final result, so this time a slight variation of it is used: The most recent poll is always used, and if any other polls were taken within a week of it, they are all averaged together. This method tends to give a more stable result, so the colors don't jump all over the place due to one unusual poll.

Should I believe poll results?

Sometimes. But you should try to get answers to these questions about any polls you see. Unfortunately, some pollsters are completely unethical, as described in this article.